For the last few years, the dominant narrative in customer service and aftersales was simple: AI would replace people. Chatbots would deflect volume, GenAI would answer everything instantly, and service costs would fall sharply.

Many organizations acted on this belief. They deployed virtual agents, copilots, and automation across customer service and aftersales operations. Productivity improved. Response times came down. Yet when leaders looked at the P&L, the results were far less convincing. Cost-to-serve reductions were modest, escalations often increased, and human effort merely shifted instead of disappearing.

In my experience, the problem was never that AI “didn’t work.” The problem was where AI was applied and what it was expected to replace. If embedded into execution rather than layered on top of broken processes, AI can genuinely improve aftersales performance.

Most AI investments in aftersales improved productivity but failed to deliver material ROI. The root cause was structural: AI optimized conversations and individual tasks but did not carry decisions through the service value chain.

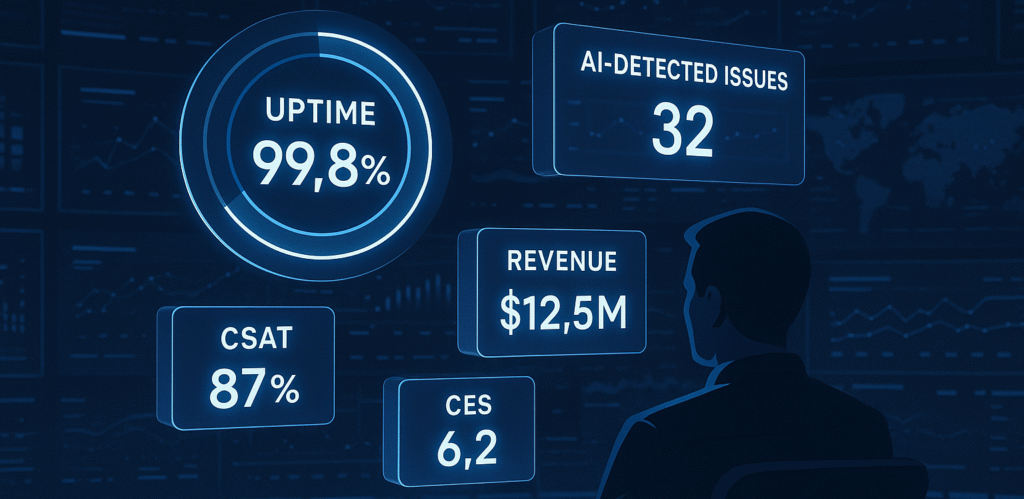

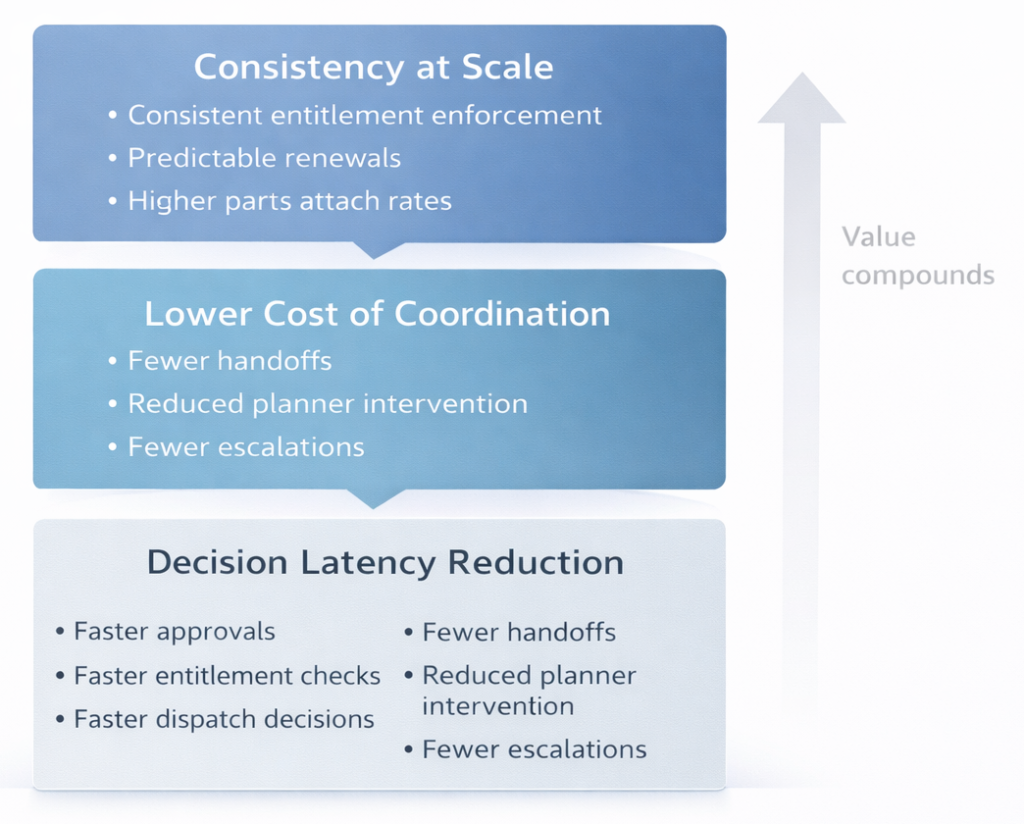

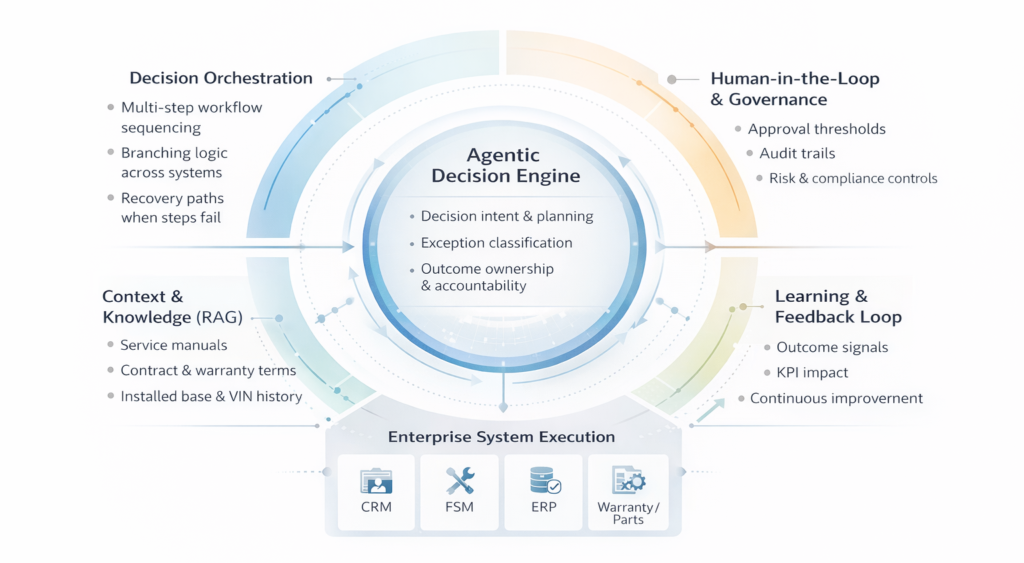

Agentic AI changes this equation by orchestrating decisions end to end, across warranty, parts, dealers, and enterprise systems. The business case is incremental, not disruptive. Value comes from reducing decision latency, lowering the cost of coordination, and enforcing consistency at scale, while keeping humans firmly in the loop for high-risk exceptions.

Organizations that are structured enough to absorb autonomy, yet still constrained by human-dependent coordination, see the most reliable returns.

From “AI Will Replace Customer Service” to Why Value Never Fully Showed Up

Early AI initiatives focused almost entirely on front-line interactions. The logic was intuitive: if customer questions could be answered faster and more accurately, downstream efficiency would follow.

In practice, aftersales operations are shaped less by conversations and more by coordination. A resolved chat does not guarantee correct entitlement handling. A strong diagnosis does not ensure the right part is dispatched. Even with automated intake and routing, humans remain responsible for reconciling decisions across CRM, FSM, ERP, and warranty systems.

AI performed well at answering questions. It struggled with decision continuity.

AI didn’t fail because it couldn’t understand customers. It failed because it couldn’t carry decisions through the service system. That distinction explains why so many pilots stalled and why leaders are now far more cautious about scaling GenAI initiatives.

Aftersales Economics: Where Cost-to-Serve Leakage Really Occurs Today

Most modern service organizations have already automated intake, routing, and basic triage. Platforms like Salesforce, ServiceNow, and OEM-specific FSM tools handle the “happy path” reasonably well.

The real leakage today occurs after automation hands off control.

At that point, when variability increases or context is incomplete, decisions stall or fragment, and humans step in to compensate.

The most common sources of leakage include:

- Exception-heavy processes: Automated flows handle the happy path, but a significant share of service volume still falls into exceptions that require manual coordination.

- Planner and supervisor intervention: Dispatch optimization, parts substitution, and rescheduling often depend on experienced planners reconciling system constraints.

- Repeat visits and rework: Frequently caused by incomplete upstream decisions rather than technician capability.

- Escalations driven by uncertainty: Customers escalate when outcomes are unclear, increasing handling cost and management overhead.

Automation exists. Decision continuity does not.

Why Copilots Plateau in Aftersales Environments

GenAI copilots have undeniably improved individual productivity. Agents resolve cases faster. Technicians access knowledge more easily. Supervisors get quicker insights. These gains are real, but they plateau quickly in complex service environments. The narrative that AI would replace customer service was always flawed; in reality, AI will redefine it by shifting value from conversation to execution.

Before examining why copilots fall short, it is important to recognize what they are designed to do: assist humans, not replace the coordination role humans play today. As volume grows or variability increases, humans still absorb the cognitive load of stitching outcomes together across systems. Recommendations require validation. Exceptions accumulate. Decision ownership remains unclear.

Aftersales does not suffer from a lack of intelligence. It suffers from a lack of consistent decision execution at scale.

This structural limitation explains why copilots deliver local efficiency gains but struggle to move system-level metrics such as service margin, FTFR, or cost-to-serve.

The Incremental Value Stack of Agentic AI in Aftersales

Agentic AI creates value not by being “smarter,” but by changing how decisions move through the organization. Its impact is incremental, cumulative, and economic in nature.

At the most basic level, Agentic AI reduces decision latency. Many service delays are caused not by complexity, but by waiting: for approvals, entitlement checks, parts confirmation, or human availability. Agentic systems autonomously sequence these steps, retrieve the required context, and move decisions forward. The business impact is shorter cycle times, lower backlog, and improved asset uptime.

The second layer of value comes from reducing the cost of coordination. In most organizations, humans act as the glue between systems. Planners reconcile constraints. Supervisors resolve conflicts. Agents chase downstream actions. Agentic AI takes ownership of this coordination, reducing the number of touchpoints required to move a case from intake to resolution.

The third layer of value is consistency at scale, particularly on the revenue side. Revenue leakage in aftersales rarely occurs because contracts are poorly designed; it occurs because enforcement is inconsistent. This is why service revenue often remains untapped even when organizations believe they have a clear strategy in place.

Agentic systems apply rules uniformly, trigger renewals predictably, and surface monetization opportunities based on asset and service context.
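
To make "applying rules uniformly" concrete, here is a minimal Python sketch of one billability rule and one renewal trigger evaluated the same way for every case. The `Contract` fields, the 60-day renewal window, and the asset ID are assumptions made for illustration; they do not come from any specific platform or contract model.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Contract:
    # Hypothetical, simplified contract record.
    asset_id: str
    covers_parts: bool
    end_date: date


def is_billable(contract: Contract, service_date: date, needs_parts: bool) -> bool:
    """Return True when the visit falls outside contract coverage."""
    expired = service_date > contract.end_date
    uncovered_parts = needs_parts and not contract.covers_parts
    return expired or uncovered_parts


def due_for_renewal(contract: Contract, today: date, window_days: int = 60) -> bool:
    """Return True when a still-active contract ends within the renewal window."""
    return today <= contract.end_date <= today + timedelta(days=window_days)


# Illustrative contract: labor-only coverage ending mid-2025.
contract = Contract(asset_id="PUMP-001", covers_parts=False, end_date=date(2025, 6, 30))

print(is_billable(contract, date(2025, 5, 1), needs_parts=True))
print(due_for_renewal(contract, today=date(2025, 5, 15)))
```

Because the rule lives in one function rather than in individual judgment, every case with the same contract and service context gets the same answer, which is the consistency argument made above.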

Together, these layers form the incremental value stack: faster decisions, cheaper coordination, and more reliable execution. In automotive and industrial service, faster decisions ultimately translate into improved uptime, which remains the KPI that really matters in service.

What It Takes to Build an Agentic System in Practice

Despite the terminology, Agentic AI is not a single model or product. It is an operating capability built on top of existing enterprise systems.

At the core sits a decision orchestration layer that decomposes work into steps, determines next actions, and handles branching logic. Frameworks such as LangChain and workflow engines are commonly used here. Surrounding orchestration is the integration and execution layer. Agentic systems must read from and write to CRM, FSM, ERP, and warranty platforms. Without execution, insight has no economic value.

Equally important is the governance and trust layer. Aftersales decisions carry financial, contractual, and sometimes safety implications. Human-in-the-loop controls, auditability, and clear autonomy boundaries are non-negotiable.

Finally, learning loops allow agentic systems to improve based on outcomes rather than prompts, which is why value tends to compound over time rather than peak early.
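
The orchestration and governance layers described above can be sketched in a few lines of plain Python. This is an illustrative sketch, not a reference implementation: the step functions are stubs that only write to an audit log (a real system would call CRM, FSM, ERP, and warranty APIs), and the 500 USD auto-approval limit and the `Case` fields are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Case:
    # Hypothetical, simplified service case.
    case_id: str
    claim_value_usd: float
    safety_related: bool
    audit_log: list[str] = field(default_factory=list)
    status: str = "open"


# Assumed autonomy boundary: above this value, a human must approve.
AUTO_APPROVE_LIMIT_USD = 500.0


def check_entitlement(case: Case) -> None:
    case.audit_log.append("entitlement checked")


def reserve_part(case: Case) -> None:
    case.audit_log.append("part reserved")


def schedule_visit(case: Case) -> None:
    case.audit_log.append("visit scheduled")


# The ordered steps the orchestrator carries a routine case through.
STEPS: list[Callable[[Case], None]] = [check_entitlement, reserve_part, schedule_visit]


def needs_human(case: Case) -> bool:
    """Governance rule: safety-related or high-value cases leave the automated path."""
    return case.safety_related or case.claim_value_usd > AUTO_APPROVE_LIMIT_USD


def orchestrate(case: Case) -> Case:
    """Run all steps for routine cases; route high-risk cases to a human."""
    if needs_human(case):
        case.audit_log.append("routed to human approver")
        case.status = "pending_human_review"
        return case
    for step in STEPS:
        step(case)
    case.status = "scheduled"
    return case


routine = orchestrate(Case("C-1", claim_value_usd=120.0, safety_related=False))
risky = orchestrate(Case("C-2", claim_value_usd=120.0, safety_related=True))
print(routine.status, routine.audit_log)
print(risky.status, risky.audit_log)
```

The design point is that the autonomy boundary is an explicit, testable rule and every action leaves an audit entry, so the decision to widen or narrow autonomy becomes a configuration change rather than a rewrite.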

Cost–Benefit Reality Check: What Agentic AI Really Costs

Most of the cost associated with Agentic AI is not AI-specific. It reflects the effort required to make decisions explicit, executable, and auditable. This is usually the work that humans have historically absorbed informally.

| Cost Component | Typical Range (USD) | What This Covers | Sample Tools |
|---|---|---|---|
| Data & integration readiness | 150k–500k | Asset models, entitlements, and integration with FSM, ERP, CPQ, and similar systems | Informatica, MuleSoft, Boomi |
| Orchestration & agent logic | 100k–300k | Decision flows, exception handling | LangChain, n8n, workflow engines |
| Platform & model runtime | 75k–250k | LLM usage, compute, monitoring | Azure OpenAI, Bedrock |
| Governance & controls | 50k–150k | Auditability, HITL, approvals | Custom workflows |
| Change & operating model | 50k–150k | Adoption, ownership | n/a |

Mature organizations can often implement agentic capabilities at lower cost, but they also face the greatest organizational resistance, because value comes from changing decision ownership, not adding new tools.
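
As a quick arithmetic check on the cost table above, the short Python snippet below adds up the low and high ends of each range and computes how much of the total is platform and model runtime. It assumes the five components are additive for a single initiative, which the table does not state explicitly, and it treats runtime as the only clearly AI-specific line, which is an interpretation rather than a figure from the source.

```python
# Ranges copied from the cost table, in thousands of USD (low, high).
COST_RANGES_USD_K = {
    "Data & integration readiness": (150, 500),
    "Orchestration & agent logic": (100, 300),
    "Platform & model runtime": (75, 250),
    "Governance & controls": (50, 150),
    "Change & operating model": (50, 150),
}

total_low = sum(low for low, _ in COST_RANGES_USD_K.values())
total_high = sum(high for _, high in COST_RANGES_USD_K.values())

# Share of the total taken by LLM usage, compute, and monitoring.
runtime_low, runtime_high = COST_RANGES_USD_K["Platform & model runtime"]
runtime_share_low = round(runtime_low / total_low * 100, 1)
runtime_share_high = round(runtime_high / total_high * 100, 1)

print(f"Combined range: {total_low}k-{total_high}k USD")
print(f"Runtime share: {runtime_share_low}%-{runtime_share_high}%")
```

Under those assumptions the combined range is roughly 425k to 1.35M USD, with runtime at under a fifth of the total at either end, which is consistent with the point that most of the cost is not AI-specific.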

ROI Depends on Maturity and the Ability to Absorb Autonomy

The ROI from Agentic AI is often misunderstood because it is not driven by technical capability alone. It is driven by an organization’s ability to absorb autonomy.

This creates a counterintuitive dynamic: Organizations with the highest theoretical upside often realize the least immediate value, while those with more structure realize value faster, but with a lower ceiling.

The most accurate way to think about ROI is therefore not “high vs low maturity,” but potential value vs realized value over time.

Fragmented or Reactive Organizations

Potential value: Very high

Realized value (near term): Low to moderate

Why this happens

In fragmented environments, agentic systems immediately surface hidden complexity: inconsistent data, unclear decision ownership, undocumented exceptions, and conflicting process logic. While this creates an opportunity for structural improvement, it also overwhelms the organization’s ability to absorb change.

Instead of reducing effort, agents initially expose how much human coordination was compensating for weak foundations. As a result, exceptions increase before they decline, and humans are pulled back in to stabilize outcomes.

Typical outcome

- Year 1 impact is often cost-neutral

- ROI depends on follow-on process standardization and data cleanup

- Value is real, but delayed and uneven

Standardized, KPI-Driven Organizations (Sweet Spot)

Potential value: High

Realized value: High

Why this happens

These organizations already have repeatable decisions, visible KPIs, and basic process discipline. What constrains performance is not a lack of intelligence, but the cost and latency of coordination, with planners, supervisors, and specialists stitching decisions together across systems.

Agentic AI thrives here because it replaces human-dependent coordination, not human judgment. Decisions propagate faster, exceptions are handled consistently, and variability is reduced without destabilizing operations.

Typical outcome

- 5–10% service margin improvement

- Measurable cost-to-serve reduction

- Clear attribution to reduced rework, escalations, and planner effort

Highly Optimized Organizations

Potential value: Moderate

Realized value: Predictable

Why this happens

In highly mature environments, many coordination problems have already been addressed through process design, tooling, and governance. Agentic AI therefore does not unlock new value pools; it optimizes existing ones. The value comes from incremental efficiency gains, selective headcount reduction, and improved resilience rather than from transformational shifts.

Typical outcome

- Greater operational stability at scale

- Incremental margin improvement

- Reduced dependency on scarce experts

If your aftersales operation relies on people to remember, reconcile, and rescue decisions, agentic AI will expose that dependency before it eliminates it.

What This Means for the Future of AI in Customer Service

The future of AI in customer service is not more conversation. It is better decisions executed reliably.

Customer experience improves when outcomes are predictable, escalations are rare, and promises are consistently kept. Agentic AI improves customer service indirectly, by fixing the system behind it.

Agentic AI is not about replacing customer service teams. It is about removing people as the bottleneck in repeatable decisions.

If service outcomes depend entirely on humans being available, perfectly informed, and endlessly consistent, they will never scale. Agentic AI changes that equation incrementally, credibly, and economically.